Digital World: What is Apache Kafka and How to Set It Up

In today’s fast-paced digital world, real-time data streaming is essential. Whether you’re tracking user activity, processing financial transactions, or managing IoT devices, you need a reliable data pipeline. This is where Apache Kafka comes in. But what exactly is Kafka, and how do you set it up from scratch? Let’s break it down.

What is Apache Kafka?

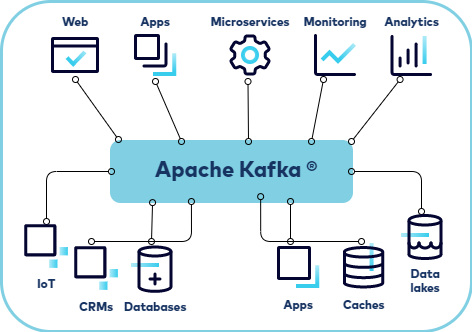

Apache Kafka is an open-source distributed event streaming platform designed for high-throughput, low-latency data processing. Originally developed by LinkedIn, Kafka is now part of the Apache Software Foundation. It acts as a central hub where data flows from various sources (called producers) to multiple destinations (called consumers).

Kafka is widely used for building real-time data pipelines, log aggregation, and event-driven architectures. Its main components include:

- Producers – Publish data to Kafka topics.

- Consumers – Subscribe to topics and process the incoming data.

- Topics – Categories or feed names to which records are sent.

- Brokers – Kafka servers that store and serve data.

- ZooKeeper – Manages and coordinates Kafka brokers in older deployments. Newer releases replace it with Kafka's built-in KRaft consensus mode, and Kafka 4.0 removed ZooKeeper support entirely.
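To make the relationship between these pieces concrete, here is a toy model in shell. It is not Kafka: a plain file stands in for a single-partition topic, `produce` appends a record, and `consume_from` reads from a given position (Kafka calls that position an offset). The names `TOPIC_FILE`, `produce`, and `consume_from` are made up for this sketch.

```shell
# Toy model only: one file stands in for a single-partition topic.
TOPIC_FILE="${TOPIC_FILE:-/tmp/toy-topic.log}"

# Producer side: append one record to the end of the log.
produce() {
  printf '%s\n' "$1" >> "$TOPIC_FILE"
}

# Consumer side: print every record at or after OFFSET (0-based).
# Reading does not delete anything, so several readers can work independently.
consume_from() {
  tail -n "+$(( $1 + 1 ))" "$TOPIC_FILE"
}
```

Because reading never removes records, two consumers can read the same file from different positions, which is roughly how independent Kafka consumers share a topic. A real broker adds partitioning, replication, and retention on top of this idea.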

Key Features

- High throughput: Can handle millions of messages per second on a suitably sized cluster.

- Scalability: Easily scales horizontally across many servers.

- Durability: Persists messages to disk and replicates them across brokers.

- Fault tolerance: Keeps running when individual brokers fail, provided topics are replicated.

How to Set Up Apache Kafka (Step-by-Step Guide)

Setting up Kafka may sound complex, but you can get a basic single-broker setup running in a few steps.

Step 1: Install Java

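Kafka runs on the JVM, so you need Java installed. Older Kafka releases run on Java 8, but recent ones need a newer version (Kafka 4.0 brokers require Java 17), so check the requirements for the release you download. You can check your installed version by running: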

java -version

If it’s not installed, download it from the Oracle or OpenJDK website.
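The version string format changed after Java 8 (`1.8.0_292` versus `17.0.9`), which makes it easy to misread. Below is a small sketch of a helper that extracts the major version. `java_major` and `installed_java_version` are hypothetical names, and the parsing assumes the usual `java -version` output, with the version in double quotes on the first line.

```shell
# Extract the major Java version from a version string such as
# "1.8.0_292" (Java 8) or "17.0.9" (Java 17).
java_major() {
  case "$1" in
    1.*) v=${1#1.}; echo "${v%%.*}" ;;   # legacy scheme: 1.8.x -> 8
    *)   echo "${1%%[.+-]*}" ;;          # modern scheme: 17.0.9 -> 17
  esac
}

# `java -version` prints to stderr; take the quoted token on the first line.
installed_java_version() {
  java -version 2>&1 | sed -n '1s/.*"\(.*\)".*/\1/p'
}
```

Running `java_major "$(installed_java_version)"` should then print a single number you can compare against the requirement of your Kafka release.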

Step 2: Download Kafka

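Head over to the Apache Kafka Downloads page and grab a binary release. This guide follows the ZooKeeper-based startup, so pick a 3.x release; Kafka 4.0 and later ship without ZooKeeper. Extract the archive: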

tar -xzf kafka_2.13-<version>.tgz

cd kafka_2.13-<version>

Step 3: Start ZooKeeper

In this ZooKeeper-based setup, Kafka relies on ZooKeeper to manage its cluster state. Start it with:

bin/zookeeper-server-start.sh config/zookeeper.properties

ZooKeeper runs on port 2181 by default.

Step 4: Start Kafka Broker

With ZooKeeper running, launch the Kafka broker in a second terminal:

bin/kafka-server-start.sh config/server.properties

Kafka uses port 9092 by default.
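Both servers take a moment to start, so it can help to confirm the ports are accepting connections before moving on. The following is a minimal sketch, assuming bash (it relies on bash's `/dev/tcp` redirection); `wait_for_port` is a hypothetical helper name, not part of Kafka.

```shell
# Poll until HOST:PORT accepts TCP connections, or give up after a timeout.
# Usage: wait_for_port HOST PORT [TIMEOUT_SECONDS]
wait_for_port() {
  host=$1 port=$2 remaining=${3:-30}
  while [ "$remaining" -gt 0 ]; do
    # bash opens a TCP connection when redirecting to /dev/tcp/HOST/PORT
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    sleep 1
    remaining=$((remaining - 1))
  done
  return 1
}
```

For example, `wait_for_port localhost 2181 && wait_for_port localhost 9092 && echo ready`. An open port only shows that the process is listening; the broker log is still the place to look if later steps fail.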

Step 5: Create a Topic

Topics are where data lives. Create one like this:

bin/kafka-topics.sh --create --topic test-topic --bootstrap-server localhost:9092 --partitions 1 --replication-factor 1
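To confirm the topic exists, the same `kafka-topics.sh` script also supports `--list` and `--describe`. Here is a small sketch wrapping both; the function names and the `KAFKA_TOPICS` and `BOOTSTRAP` variables are assumptions for illustration, defaulting to the script path and address used in this guide.

```shell
# Script path and broker address; override them if your setup differs.
KAFKA_TOPICS="${KAFKA_TOPICS:-bin/kafka-topics.sh}"
BOOTSTRAP="${BOOTSTRAP:-localhost:9092}"

# Print the names of all topics known to the broker.
list_topics() {
  "$KAFKA_TOPICS" --list --bootstrap-server "$BOOTSTRAP"
}

# Print partition and replica details for one topic.
describe_topic() {
  "$KAFKA_TOPICS" --describe --topic "$1" --bootstrap-server "$BOOTSTRAP"
}
```

Running `describe_topic test-topic` from the Kafka directory should report one partition with a replication factor of 1, matching the create command above.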


Step 6: Start a Producer

Send messages to your topic:

bin/kafka-console-producer.sh --topic test-topic --bootstrap-server localhost:9092

Type messages and hit Enter to send them.
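The console producer reads from standard input, so you can also pipe messages in rather than typing them. A minimal sketch follows; `send_lines`, `KAFKA_PRODUCER`, and `BOOTSTRAP` are hypothetical names chosen for this example.

```shell
# Script path and broker address; override them if your setup differs.
KAFKA_PRODUCER="${KAFKA_PRODUCER:-bin/kafka-console-producer.sh}"
BOOTSTRAP="${BOOTSTRAP:-localhost:9092}"

# Send every line of standard input to TOPIC as a separate message.
send_lines() {
  "$KAFKA_PRODUCER" --topic "$1" --bootstrap-server "$BOOTSTRAP"
}
```

For example, `printf 'first\nsecond\n' | send_lines test-topic` sends two messages and exits when the input ends.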

Step 7: Start a Consumer

In another terminal, start a consumer to read those messages:

bin/kafka-console-consumer.sh --topic test-topic --from-beginning --bootstrap-server localhost:9092

You’ll see the messages appear in real time. The consumer keeps running and waiting for new messages until you stop it with Ctrl+C.
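If you would rather have the consumer exit on its own, for instance in a quick smoke test, the console consumer accepts `--max-messages`. This sketch wraps it; `read_first`, `KAFKA_CONSUMER`, and `BOOTSTRAP` are hypothetical names for illustration.

```shell
# Script path and broker address; override them if your setup differs.
KAFKA_CONSUMER="${KAFKA_CONSUMER:-bin/kafka-console-consumer.sh}"
BOOTSTRAP="${BOOTSTRAP:-localhost:9092}"

# Print the first COUNT messages of TOPIC from the beginning, then exit.
# Usage: read_first TOPIC COUNT
read_first() {
  "$KAFKA_CONSUMER" --topic "$1" --from-beginning --max-messages "$2" \
    --bootstrap-server "$BOOTSTRAP"
}
```

`read_first test-topic 2` prints two messages and returns. If the topic holds fewer messages than requested, the consumer keeps waiting; adding the `--timeout-ms` option is one way to bound that wait.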

Apache Kafka is a game-changer for real-time data processing. Its distributed design, fault tolerance, and high throughput make it well suited to modern applications. Whether you’re building a logging system, data pipeline, or real-time analytics engine, Kafka has you covered.

By following the simple setup steps above, you can get started with Kafka in minutes. Once you’re comfortable with the basics, you can explore Kafka Connect and Kafka Streams, along with ecosystem tools such as Confluent Schema Registry.